1-bit Stochastic Gradient Descent (1-bit SGD)

1-bit Stochastic Gradient Descent is a technique from Microsoft Research aimed at increasing the data parallelism inherent in training deep neural networks. They describe the technique in the paper 1-Bit Stochastic Gradient Descent and Application to Data-Parallel Distributed Training of Speech DNNs.

They accelerate training neural networks with stochastic gradient descent by:

- splitting up the computation for each minibatch across many nodes in a distributed system.

- reducing the bandwidth requirements for communication between nodes by exchanging gradients (instead of model parameters) and quantizing those gradients all the way to just 1 bit.

- they add the quantization error from Step 2 into the next minibatch gradient before quantization.

1-bit Stochastic Gradient Descent is available is a technique in Microsoft’s Cognitive Toolkit (CNTK).

References

1x1 Convolution

A 1x1 convolution or a network in network is an architectural technique used in some convolutional neural networks.

The technique was first described in the paper Network In Network.

A 1x1 convolution is a convolutional layer where the filter is of dimension .

The filter takes in a tensor of dimension , over the values in the third dimension and outputting a matrix. Subsequently, an activation function (like ReLU) is applied to the output matrix.

If we have filters, then the output of the layer is a tensor of dimension . This is useful if the number of channels in the previous layer of the network has grown too large and needs to be altered to channels.

The convolution technique was featured in paper introducing the Inception network architecture, titled Going Deeper With Convolutions.

References

Abscissa

Abscissa is an obscure term for referring to the horizontal axis (the x-axis) on a two-dimensional coordinate plane.

Abstractive sentence summarization

Abstractive sentence summarization refers to creating a shorter version of a sentence with the same meaning.

This is in contrast to extractive sentence summarization, which pulls the most informative sentences from a document.

Related Terms

ACCAMS

ACCAMS: Additive Co-Clustering to Approximate Matrices Succinctly

Related Terms

References

Activation function

In neural networks, an activation function defines the output of a neuron.

The activation function takes the dot product of the input to the neuron () and the weights ().

Typically activation functions are nonlinear, as that allows the network to approximate a wider variety of functions.

Related Terms

References

- Activation function - Wikipedia

(en.wikipedia.org) - Commonly used activation functions - Stanford CS231n notes

(cs231n.github.io)

AdaBoost

AdaBoost, short for Adaptive Boosting, aka Weight Boosted Trees, uses the same training set over and over. Increases the margin (separation) like SVMs.

ADADELTA

ADADELTA is a gradient descent-based optimization algorithm. Like AdaGrad, ADADELTA automatically tunes the learning rate.

References

AdaGrad

AdaGrad is a gradient-descent based optimization algorithm. It automatically tunes the learning rate based on its observations of the data’s geometry. AdaGrad is designed to perform well with datasets that have infrequently-occurring features.

Related Terms

References

- Adaptive Subgradient Methods for Online Learning and Stochastic Optimization

(jmlr.org) - An overview of gradient descent optimization algorithms - Sebiastian Ruder

(sebastianruder.com)

ADAM Optimizer

ADAM, or Adaptive Moment Estimation, is a stochastic optimization method introduced by Diederik P. Kingma and Jimmy Lei Ba.

They intended to combine the advantages of Adagrad’s handling of sparse gradients and RMSProp’s handling of non-stationary environments.

Related Terms

References

- Adam: A Method for Stochastic Optimization

(arxiv.org) - ADAM - An overview of gradient descent optimization algorithms - Sebastian Ruder

(sebastianruder.com)

Adaptive learning rate

The term adaptive learning rate refers to variants of stochastic gradient descent with learning rates that change over the course of the algorithm’s execution.

Allowing the learning rate to change dynamically eliminates the need to pick a “good” static learning rate, and can lead to faster training and a trained model with better performance.

Some adaptive learning rate algorithms are: - Adagrad - ADADELTA - ADAM

Additive clustering

Additive model

Related Terms

References

Adversarial autoencoder

Related Terms

References

Adversarial Variational Bayes

References

Affine space

Related Terms

Affinity analysis

Related Terms

References

Affinity propagation clustering

References

AlexNet

AlexNet is a convolutional neural network architecture proposed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton in 2012.

At the time, it achieved state-of-the-art performance on the test set for the 2010 ImageNet Large Scale Visual Recognition Competition (LSVRC). A variant of the model won the 2012 ImageNet LSVRC with a top-5 test error rate of 15.3%–ten percentage points ahead of the second place winner.

Related Terms

References

Alternating conditional expectation (ACE) algorithm

References

Anchor box

Anchor boxes are a technique used in some computer vision object detection algorithms to help identify objects of different shapes.

Anchor boxes are hand-picked boxes of different height/width ratios (for 2-dimensional boxes) designed to match the relative ratios of the object classes being detected. For example, an object detector that detects cars and people may have a wide anchor box to detect cars and a tall, narrow box to detect people.

The Fast R-CNN paper introduced the idea of using the -means-clustering to automatically determine the appropriate anchor box dimensions for a given number of anchor boxes.

References

Antonym

Association rule mining

Related Terms

References

- Association rule learning - Wikipedia

(en.wikipedia.org) - Mining association rules between sets of items in large databases

(dl.acm.org)

Attention Mechanism

Related Terms

Autocorrelation matrix

References

Autoencoder

Autoencoders are an unsupervised learning model that aim to learn distributed representations of data.

Typically an autoencoder is a neural network trained to predict its own input data. A large enough network will simply memorize the training set, but there are a few things that can be done to generate useful distributed representations of input data, including:

- constraining the size of the model, forcing it to learn a lower-dimensional representation that can be used to re-construct the original higher-dimensional data points.

- adding artificial noise to the initial data points, and training the autoencoder to predict the data points minus the artificial noise. See denoising autoencoder for more information.

Related Terms

- Adversarial autoencoder

- Adversarial Variational Bayes

- Contractive autoencoder (CAE)

- Denoising autoencoder

- Self-supervised learning

- Semantic hashing

- Stacked autoencoder

- Variational Autoencoder (VAE)

References

- Autoencoder - Wikipedia

(en.wikipedia.org) - Autoencoders - Deep Learning Tutorial

(deeplearning.net) - What is the difference between an autoencoder and a neural network?

(www.quora.com)

Average pooling

Backprop

See Backpropagation.Backpropagation Through Time (BPTT)

References

Backpropagation

A technique to find good weight values in a neural network by trying different weights, and seeing if the change contributes positively to prediction quality.

Synonyms

- Backprop

Related Terms

- 1-bit Stochastic Gradient Descent (1-bit SGD)

- Backpropagation Through Time (BPTT)

- Long Short-Term Memory (LSTM)

References

- Yes you should understand backprop - Andrej Karpathy

(medium.com) - Backpropagation - Wikipedia

(en.wikipedia.org)

Bag-of-n-grams

A bag-of--grams model is a way to represent a document, similar to a [bag-of-words][/terms/bag-of-words/] model.

A bag-of--grams model represents a text document as an unordered collection of its -grams.

For example, let’s use the following phrase and divide it into bi-grams ().

James is the best person ever.

becomes

<start>JamesJames isis thethe bestbest personperson ever.ever.<end>

In a typical bag-of--grams model, these 6 bigrams would be a sample from a large number of bigrams observed in a corpus. And then James is the best person ever. would be encoded in a representation showing which of the corpus’s bigrams were observed in the sentence.

A bag-of--grams model has the simplicity of the bag-of-words model, but allows the preservation of more word locality information.

Bag-of-words

The phrase bag-of-words typically refers to a way of representing text in natural language processing, although it has been applied to computer vision.

A bag-of-words representation contains how many times each word appears in a document, but disregards the order of the words.

Often, bag-of-words models will only include the most frequent words in a corpus. This reduces the memory needed to store relatively-infrequent words, and the underlying representation of the document is mostly the same because common words dominate the document.

Bag-of-words models are often highly effective at representing documents in tasks like classification, clustering, or topic modeling. But they can struggle with tasks where word order matters, like sentiment analysis and machine translation. For example, in a bag-of-words model, the phrase “dog bites man” and “man bites dog” have identical representations.

Related Terms

References

- Bag-of-words model - Wikipedia

(en.wikipedia.org) - Bag of Words Meets Bags of Popcorn - Kaggle

(www.kaggle.com)

Bagging

Bagging, short for bootstrap aggregating, is training different base learners on different subsets of the training set randomly, by drawing random training sets from the given sample (with replacement).

References

Batch normalization

Batch normalization is a technique used to improve the stability and performance of deep neural networks. It works by normalizing the input data at each layer, which allows the network to learn more effectively. Batch normalization has been shown to improve training times, accuracy, and robustness of deep neural networks.

Related Terms

References

- Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

(arxiv.org) - Batch Normalization — What the hey?

(gab41.lab41.org) - Why does batch normalization help? - Quora

(www.quora.com) - Understanding the backward pass through Batch Normalization Layer

(kratzert.github.io)

Bayesian optimization

Related Terms

Bayesian Probabilistic Matrix Factorization (BPMF)

Beam search

Beam search is a memory-restricted version of breadth-first search.

Beam search commonly appears in machine translation or other literature where sequence-to-sequence learning is common. In this domain, beam search allows the neural network to consider many candidate responses instead of selecting the highest-scoring token at each step.

Google’s blog post announcing SyntaxNet explains the advantages of beam search for sequence-to-sequence learning in greater detail: > At each point in processing many decisions may be possible—due to ambiguity—and a neural network gives scores for competing decisions based on their plausibility. For this reason, it is very important to use beam search in the model. Instead of simply taking the first-best decision at each point, multiple partial hypotheses are kept at each step, with hypotheses only being discarded when there are several other higher-ranked hypotheses under consideration.

References

- Beam search - Wikipedia

(en.wikipedia.org) - Why is beam search required in sequence-to-sequence transduction using recurrent neural networks? - Quora

(www.quora.com)

Bias-variance tradeoff

The bias-variance tradeoff refers to the problem of minimizing two different sources of error when training a supervised learning model:

Bias - Bias is a consistent error, possibly from the algorithm having made an incorrect assumption about the training data. Bias is often related to underfitting.

Variance - Variances comes from a high sensitivity to differences in training data. Variance is often related to overfitting.

It is typically difficult to simultaneously minimize bias and variance.

References

Bias

Related Terms

References

- Bias of an estimator - Wikipedia

(en.wikipedia.org) - Bias (statistics) - Wikipedia

(en.wikipedia.org)

Bidirectional LSTM

Related Terms

Bidirectional Recurrent Neural Network (BRNN)

Bilingual Evaluation Understudy (BLEU)

Related Terms

Binary Tree LSTM

References

Bit transparency (audio)

A digital audio system satisfies bit transparency if audio data can pass through the system without being changed.

A system can fail to be bit-transparent if it performs any type of digital signal processing–such as changing the audio’s sample rate. Some audio operations–like converting audio samples from integer to float and back–can either be bit-transparent or not depending on the implementation.

An audio system can be tested for bit-transparency by giving a random sequence of bits as input and testing that the output is bit-for-bit identical to the input.

References

Black-Box optimization

Related Terms

Boltzmann machine

References

Boosting

Learners trained serially so that instances on which the preceding base learners are not accurate are given more emphasis in training later base-learners; actively tries to generate complementary learners, instead of leaving this to chance.

References

Bounding box

A bounding box is a rectangle (in 2D datasets) or rectangular prism (in 3D datasets) drawn around an object identified in an image.

Object localization is a task in computer vision where a model is trained to draw bounding boxes around object detected in an image.

Related Terms

Burstiness

Wikipedia defines burstiness as follows:

In statistics, burstiness is the intermittent increases and decreases in activity or frequency of an event. One of measures of burstiness is the Fano factor—a ratio between the variance and mean of counts.

Word Burstiness

In natural language processing, burstiness has a slightly more specific definition, defined by Slava Katz in the mid 1990s.

The authors of Accounting for Burstiness in Topic Models give the following succinct definition of burstiness:

Church and Gale (1995) note that real texts systematically exhibit this phenomenon: a word is more likely to occur again in a document if it has already appeared in the document. Importantly, the burstiness of a word and its semantic content are positively correlated; words that are more informative are also more bursty.

Additionally, burstiness also tells us that later appearances of a word are less significant than the first appearance.

If a term is used once in a document, then it is likely to be used again. This phenomenon is called burstiness, and it implies that the second and later appearances of a word are less significant than the first appearance.

Related Terms

References

Capture-recapture model

A capture-recapture model is a technique to estimate an unknown population by capturing, tagging, and re-capturing samples from the population.

In the article How many Mechanical Turk workers are there?, Panos Ipeirotis explains a simple version of a capture-recapture model as follows:

The simplest possible technique is the following:

Capture/marking phase: Capture animals, mark them, and release them back.

Recapture phase: A few days later, capture animals. Assuming there are animals overall, of them are marked. So, for each of the captured animals, the probability that the animal is marked is (from the capture/marking phase).

Calculation: On expectation, we expect to see marked animals in the recapture phase. (Notice that we do not know .) So, if we actually see marked animals during the recapture phase, we set and we get the estimate that:

He adds that this basic version of a capture-recapture model makes the following assumptions, and the estimate can be inaccurate when these assumptions are violated:

Assumption of no arrivals / departures (“closed population”): The vanilla capture-recapture scheme assumes that there are no arrivals or departures of workers between the capture and recapture phase.

Assumption of no selection bias (“equal catchability”): The vanilla capture-recapture scheme assumes that every worker in the population is equally likely to be captured.

References

Catastrophic forgetting

Catastrophic forgetting (or catastrophic interference) is a problem in machine learning where a model forgets an existing learned pattern when learning a new one.

The model uses the same parameters to recognize both patterns, and learning the second pattern overwrites the parameters’ configuration from having learned the first pattern.

References

- Overcoming catastrophic forgetting in neural networks

(arxiv.org) - Catastrophic interference - Wikipedia

(en.wikipedia.org) - Catastrophic forgetting - Standout Publishing

(standoutpublishing.com) - Catastrophic Interference in Connectionist Networks: The Sequential Learning Problem

(www.sciencedirect.com)

Categorical mixture model

Child-Sum Tree-LSTM

References

Chinese Restaurant Process

Related Terms

Chunking

The paper Natural Language Processing (almost) from Scratch describes chunking as:

Also called shallow parsing, chunking aims at labeling segments of a sentence with syntactic constituents such as noun or verb phrases (NP or VP). Each word is assigned only one unique tag, often encoded as a begin-chunk (e.g., B-NP) or inside-chunk tag (e.g., I-NP).

Clustering stability

Related Terms

Clustering

Related Terms

- Additive clustering

- Affinity propagation clustering

- Clustering stability

- Co-clustering

- Community structure

- K-Means clustering

- Nonparametric clustering

- Parametric clustering

- Unsupervised learning

Co-adaptation

In neural networks, co-adaptation refers to when different hidden units in a neural networks have highly correlated behavior.

It is better for computational efficiency and the the model’s ability to learn a general representation if hidden units can detect features independently of each other.

A few different regularization techniques aim at reducing co-adapatation–dropout being a notable one.

References

Co-clustering

Related Terms

Codebook collapse

Codebook collapse is a problem that arises when training generative machine learning models that generate outputs using a fixed-length codebook, such as the Vector-Quantized Variational Autoencoder (VQ-VAE).

In ideal scenarios, the model’s fixed-size codebook is large enough to create a diverse set of output values. Codebook collapse happens when the model only learns to use a few of the values in the codebook–artificially limiting the diversity of outputs that the model can generate.

Codebook collapse is analogous to mode collapse, another problem commonly faced when training generative models.

Related Terms

Codebook

A codebook is a fixed-size table of embedding vectors learned by a generative model such as a vector-quantized variational autoencoder (VQ-VAE).

Generative models typically encode inputs into -dimensional embedding vectors (for some ) in a continuous vector space of dimension . Then, these generative models learn to decode these embedding vectors into a desired output.

Generative models that use a codebook–like the VQ-VAE–discretize embedding vectors by outputting the closest vector in the codebook. This restricts the potential number of outputs if the model only emits a single codebook value, but by having the model emit a sequence vectors from the codebook, it is possible for a small codebook to generate an enormous number of possible values.

Models like the VQ-VAE also learn the codebook values via gradient descent–just like how the model’s encoder and decoder are learned via gradient descent.

Related Terms

References

Collaborative filtering

Related Terms

Collaborative Topic Regression (CTR)

Community detection

Community detection refers to the problem of detecting whether a graph has community structure.

Community structure

Related Terms

References

Computer vision

Computer vision is the field of teaching computers to perceive sensor data–such as from cameras, RADAR, and LIDAR sensors–to achieve an understanding of what is in the data.

Computer vision is a wide-ranging field that comprises of many techniques and subfields.

Related Terms

- Anchor box

- Neural style transfer

- Non-max suppression

- Object detection

- Object localization

- YOLO (object detection algorithm)

References

Conditional GAN

Conditional Markov Models (CMMs)

Conditional Random Fields (CRFs)

References

Confusion matrix

Connectionism

References

Connectionist Temporal Classification (CTC)

Connectionist Temporal Classification is a term coined in a paper by Graves et al. titled Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks.

It refers to the use of recurrent neural networks (which is a form of connectionism) for the purpose of labeling unsegmented data sequences (AKA temporal classification).

References

Constituency Tree-LSTM

References

Contextual Bandit

References

- Contextual Bandit - Multi-armed Bandit - Wikipedia

(en.wikipedia.org) - An Introduction to Contextual Bandits - Stream

(getstream.io)

Continuous-Bag-of-Words (CBOW)

Continuous Bag of Words refers to a algorithm that predicts a target word from its surrounding context.

CBOW is one of the algorithms used for training word2vec vectors.

Related Terms

Contractive autoencoder (CAE)

Convex combination

A convex combination is a linear combination, where all the coefficients are greater than 0 and sum to 1.

The Convex combination Wikipedia article gives the following example:

Given a finite number of points in a real vector space, a convex combination of these points is a point of the form

is a convex combination if all real numbers and

Related Terms

References

Convex hull

The convex hull of a set in an affine space over the reals is the smallest convex set that contains . When the points are two dimensional, the convex hull can be thought of as the rubber band around the points of .

As per Wikipedia, a convex set is the smallest affine space closed under convex combination.

A convex combination is a linear combination where all the coefficients are greater than 0 and all sum to 1.

Related Terms

References

- Convex hull - Wikipedia

(en.wikipedia.org) - Convex set - Wikipedia

(en.wikipedia.org) - Convex combination - Wikipedia

(en.wikipedia.org)

Convex optimization

Related Terms

Convolution

Convolution is an operation where a filter (a small matrix) is applied to some input (typically a much larger matrix).

Convolution is a common operation in image processing, but convolution has also found some applications in natural language processing, audio processing, and other fields of machine learning.

Padding

Padding is a preprocessing step before a convolution operation. The input matrix is often padded to control the output dimensions of the convolution, or ot preserve information around the edges of the input matrix.

Stride

Stride is the number of steps the filter takes in the convolution operation.

Calculating the output dimensions of a convolution

For example, let’s say we have matrix and a filter . The output dimension depends on two parameters – padding and stride .

The dimensions for the output matrix will be

.

In a same convolution, and . The matrix gets padded to $ n + p n + p$ and the output matrix becomes .

Related Terms

- 1x1 Convolution

- Convolutional Neural Networks (CNN)

- Narrow convolution

- One-dimensional convolution

- Padding (convolution)

- Same convolution

- Sobel filter (convolution)

- Stride (convolution)

- Valid convolution

- Wide convolution

Convolutional Neural Networks (CNN)

Related Terms

- 1x1 Convolution

- AlexNet

- Anchor box

- Deep Convolutional Generative Adversarial Network (DCGAN)

- Dynamic k-Max Pooling

- Fast R-CNN

- GoogLeNet

- Inception

- Inceptionism

- k-Max Pooling

- LeNet

- Narrow convolution

- Neural style transfer

- Padding (convolution)

- Pooling layer

- R-CNN

- ResNet

- Same convolution

- Sobel filter (convolution)

- Stride (convolution)

- Valid convolution

- VGGNet

- Wide convolution

- YOLO (object detection algorithm)

- YOLOv2 (object detection algorithm)

- Zero padding

References

- Convolutional neural network - Wikipedia

(en.wikipedia.org) - Stanford CS231n Convolutional Neural Networks for Visual Recognition

(cs231n.github.io) - Understanding convolutional neural networks for NLP - WildML

(www.wildml.com)

Coreference resolution

The Stanford NLP group defines coreference resolution as:

Coreference resolution is the task of finding all expressions that refer to the same entity in a text. It is an important step for a lot of higher level NLP tasks that involve natural language understanding such as document summarization, question answering, and information extraction.

Coreference resolution should not be confused with Named Entity Recognition, which is focused on labeling sequences of text that refer to entities–but not focused on linking those entities together.

Related Terms

Cosine similarity

Covariance

Covariate shift

Related Terms

References

Cross-Entropy loss

Cross validation

Cross-validation refers to a group of techniques for dividing a dataset into multiple pieces.

Many methods of cross validation boil down to some variation of the following steps:

- Split the input dataset into a training dataset and a validation dataset.

- Train a model on the training dataset.

- Measure the trained model’s accuracy against the validation set.

- Repeat the above steps with different training/validation splits, allowing you to measure the variance in validation accuracy caused by different splits.

For example, -fold cross validation is a more general version of the above procedure:

- The input dataset is divided into shards.

- Each individual shard is used as a separate validation dataset, measuring the performance of a model trained against the other shards.

Related Terms

References

- Cross-validation (statistics)

(en.wikipedia.org) - A Gentle Introduction to k-fold Cross-Validation

(machinelearningmastery.com)

Data augmentation

Data augmentation is the process of using computer algorthms or other synthetic means to increase the size of a collected dataset.

Machine learning algorithms typically become more resistant to overfitting when they are trained with more data. But in many cases, it can be expensive to collect more data.

It is often possible to significantly increase the size of a dataset by computing simple transformations that are unlikely to be learned by the model, but also do not change the value of the labels.

For example, if one were building a model to identify pictures of cats versus non-cats, flipping the pictures horizontally or vertically increases the size of the available image data. When the pictures are flipped, whether they contain a cat or not doesn’t change. Despite this, many statistical models would be unlikely to learn flip-invariance without a significant amount of data–either collected from the real-world or generated through data augmentation.

Related Terms

References

- Data Augmentation - Convolutional Neural Networks - deeplearning.ai

(www.coursera.org) - Understanding data augmentation for classification: when to warp?

(arxiv.org) - What you need to know about data augmentation for machine learning - Cartesian Faith

(cartesianfaith.com)

Data parallelism

Data parallelism is when data is distributed across multiple nodes in a distributed computing environment, and then each node acts on the data in parallel.

On each node, the computation is the same, but the data is different.

Related Terms

References

- Data parallelism - Wikipedia

(en.wikipedia.org) - What is the difference between model parallelism and data parallelism? - Quora

(www.quora.com)

Decision tree

A supervised learning method that iteratively refines a prediction by asking questions about the input feature most likely to affect the outcome, making a ‘tree’ of question branches.

Related Terms

References

Deep Convolutional Generative Adversarial Network (DCGAN)

DCGAN refers to a model described by Radford, Metz, and Chintala that uses deep convolutional neural networks in a generative adversarial network model.

Generative adversarial networks (GANs) are structured as a competition between two models:

- a generative model that tries to create fake examples of training data interspersed with real training data.

- a discriminative model that tries to classify real examples from fake ones.

DCGAN uses deep convolutional neural networks for both models. Convolutional neural networks (CNNs) are well-known for their performance on image data. DCGAN uses the strong performance of (CNNs) to learn unsupervised representations of the input data.

References

Deep Learning

Deep Learning is about learning using neural networks with multiple [hidden layers][2].

References

Denoising autoencoder

Dependency Tree LSTM

References

Derivative-free optimization

References

- Derivative-free optimization - Wikipedia

(en.wikipedia.org) - Derivative-free optimization: A review of algorithms and comparison of software implementations

(thales.cheme.cmu.edu)

Differential Evolution (DE)

Related Terms

References

Differential Topic Modeling

Dimensionality reduction

Dimensionality reduction is about taking a set of data, and reducing its number of dimensions in such a way as to balance information size and independence of the features’ information. The point is to get a smaller dataset that still retains most of the original information.

References

Dirichlet-multinomial distribution

References

Dirichlet process

Distance metric learning

Distance metric learning is the task of using a labeled dataset to learn a similarity learning where the similarity function has to obey the four axioms of a distance metric.

The problem is typically defined with a dataset where there some of the datapoints are known to be “similar” and should be closer to each other than another arbitrarily-chosen datapoint in the dataset.

Distance metric learning first received significant attention in the machine learning community from the 2002 NIPS paper titled Distance metric learning, with application to clustering with side-information.

Axioms of a distance metric

The four axioms of a distance metric are:

- Non-negativity: – The distance must always be greater than zero.

- Identity of indiscernibles: – The distance must be zero for two elements that are the same (i.e. indiscernible from each other).

- Symmetry: – The distances must be the same, no matter which order the parameters are given.

- Triangle inequality: – For three elements in the set, the sum of the distances for any two pairs must be greater than the distance for the remaining pair.

Related Terms

References

- Distance Metric Learning: A Comprehensive Survey

(www.cs.cmu.edu) - Distance metric learning, with application to clustering with side-information

(ai.stanford.edu)

Distance metric

As per Wikipedia, a distance metric, metric, or distance function, “is a function that defines a distance between each pair of elements of a set.”

A distance metric requires the following four axioms to be true for all elements , , and in a given set.

- Non-negativity: – The distance must always be greater than zero.

- Identity of indiscernibles: – The distance must be zero for two elements that are the same (i.e. indiscernible from each other).

- Symmetry: – The distances must be the same, no matter which order the parameters are given.

- Triangle inequality: – For three elements in the set, the sum of the distances for any two pairs must be greater than the distance for the remaining pair.

Related Terms

References

Distributed representation

In machine learning, data with a local representation typically has 1 unit per element. A 5-word vocabulary might be defined by a 5-dimensional vector, with denoting the first word, denoting the second word, and so forth.

Distributed representations are the opposite, instead of concentrating the meaning of a data point into one component or one “element”, the meaning of the data is distributed across the whole vector.

The word that is in a local representation might look like in a distributed representation.

Related Terms

References

- Distributed Representation - Directory of Cognitive Science

(www.bcp.psych.ualberta.ca) - Local and distributed representations - Programming Methods for Cognitive Science

(www.indiana.edu)

Distributional similarity

Distributional similarity is the idea that the meaning of words can be understood from their context.

This should not be confused with the term distributed representation, which refers to the idea of representing information with relatively dense vectors as opposed to a one-hot representation.

References

- Distributional semantics - Wikipedia

(en.wikipedia.org) - word2vec - Stanford CS224N Lecture 2

(www.youtube.com)

doc2vec

doc2vec is the gensim library’s name for its paragraph vector implementation. doc2vec can be used to generate unsupervised representations of sentences, paragraphs, and documents.

Related Terms

Domain adaptation

References

Dropout

Dropout or dilution is a technique for regularizing neural networks, developed by Hinton et al. and published in the paper Improving neural networks by preventing co-adaptation of feature detectors.

The core idea behind dropout is to randomly set some of the weights in a neural network to during the training phase.

Dropout add a hyperparameter of a “keep probability”. This is the probability that a weight value is left undisturbed– that it will not be set to .

Dropout can be considered analogous to model-averaging because the process simulates training many similar neural networks on the same data.

Related Terms

References

- Dilution (neural networks) - Wikipedia

(en.wikipedia.org) - How does the dropout method work in deep learning? - Quora

(www.quora.com) - Improving neural networks by preventing co-adaptation of feature detectors

(arxiv.org)

Dying ReLU

Dying ReLU refers to a problem when training neural networks with rectified linear units (ReLU). The unit dies when it only outputs 0 for any given input.

When training with stochastic gradient descent, the unit is not likely to return to life, and the unit will no longer be useful during training.

Leaky ReLU is a variant that solves the Dying ReLU problem by returning a small value when the input is less than 0.

References

Dynamic k-Max Pooling

Related Terms

References

Early stopping

Embed, Encode, Attend, Predict

The phrase Embed, Encode, Attend, Predict refers to Matthew Honnibal’s conceptual framework for deep learning for natural language processing.

The steps have the following meanings:

Embed – This is the process of turning text or sparse vectors into dense word embeddings. These embeddings are much easier to work with than other representations, and do an excellent job of capturing semantic information.

Encode – This is the process of encoding a sequence of word vectors into a matrix, using techniques like recurrent neural networks or LSTMs.

Attend – This refers to taking the matrix from the Encode step and transforming it into a vector, most likely using an attention mechanism.

Predict – The final step in the Natural Language Processing pipeline is making a prediction given the input text.

References

Entailment

The word entailment has many meanings. Please review Wikipedia’s disambiguation page for “entail” for definitions that do not refer to textual entailment.

Textual entailment refers to drawing a relation from an entailing text to an entailed hypothesis.

The Wikipedia page for textual entailment gives the following examples:

Textual entailment can be illustrated with examples of three different relations:

An example of a positive TE (text entails hypothesis) is:

- text: If you help the needy, God will reward you.

- hypothesis: Giving money to a poor man has good consequences.

An example of a negative TE (text contradicts hypothesis) is:

- text: If you help the needy, God will reward you.

- hypothesis: Giving money to a poor man has no consequences.

An example of a non-TE (text does not entail nor contradict) is:

- text: If you help the needy, God will reward you.

- hypothesis: Giving money to a poor man will make you a better person.

Synonyms

- Textual entailment

References

- Entailment (linguistics) - Wikipedia

(en.wikipedia.org) - Textual entailment - Wikipedia

(en.wikipedia.org) - A machine learning approach to textual entailment recognition

(disi.unitn.it) - Recognizing Textual Entailment

(l2r.cs.uiuc.edu)

Error-Correcting Tournaments

References

Expectation-maximization (EM) algorithm

References

Expectation

Related Terms

Exploding gradient problem

Related Terms

Exponential Linear Unit (ELU)

References

- Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs)

(arxiv.org) - Deep Residual Networks with Exponential Linear Unit

(arxiv.org)

Extractive sentence summarization

Extractive sentence summarization refers to programmatically creating a shorter version of a document by extracting the “important” parts.

TextRank is an example of an algorithm that can rank sentences in a document for the purpose of extractive summarization.

References

Face detection

Face detection is the problem of detecting wheter an image has a (usually human) face in it.

The problem of identifying whether the image has a specific single person’s face is called face verification. The problem of identifying whether the image has any of person’s faces is called face recognition.

Related Terms

Face recognition

Face recognition is the problem of identifying whether an input image contains the faces of any of people… or if the image has none of the faces.

Face recognition is a harder problem than face verification because face verification only compares a single image to one person, whereas face recognition does this for people.

Related Terms

References

Face verification

Face verification is the problem of identifying whether an image belongs to a person–given the one image and one person as the input.

Face verification is an easier problem than face recognition because face verification only compares a single image to one person, whereas face recognition does this for people.

Related Terms

References

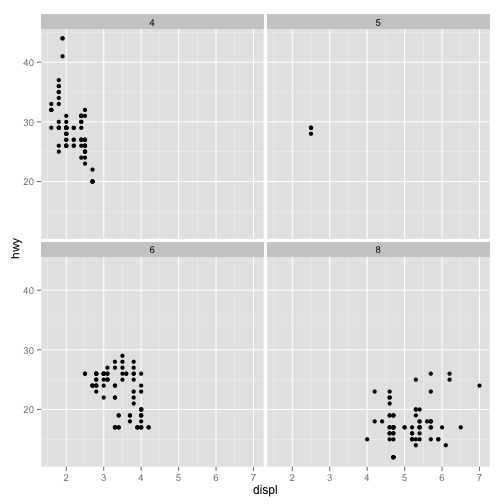

Facet

In statistical plotting, a facet is a type of plot. Data is split into subsets and the subsets are plotted in a row or grid of subplots.

The term is common among users of ggplot2, a plotting package for the R statistical computing language.

Facet is also the name of a plotting and visualizaton tool created by the People + AI Research (PAIR) initiative at Google.

References

Facet (disambiguation)

A facet can refer to a type of plot or chart designed to efficiently display multidimensional data.

Facets is also the name of a tool from Google’s People + AI Research (PAIR) lab designed to help explore datasets.

Facet (visualization tool)

Facets is a a plotting and visualizaton tool created by the People + AI Research (PAIR) initiative at Google.

Facets is broken into two tools with the following goals:

- Facets Overview – summarize statistics for features collected from datasets

- Facets Dive – explore the relationship between different features in a dataset

From the Facets homepage, they state that

Success stories of (Facets) Dive include the detection of classifier failure, identification of systematic errors, evaluating ground truth and potential new signals for ranking.

Related Terms

References

Fast Fourier transform (FFT)

References

Fast R-CNN

References

fastText

fastText is a project from Facebook Research for producing word embeddings and sentence classification.

The fastText project is hosted on Github and instructions for using their pre-trained word embeddings can be found here.

Feature learning

See Representation learning.Filter (convolution)

A filter (also known as a kernel) is a small matrix used in convolution operations.

Convolution filters are commonly used in image processing to modify images or extract features.

The dimensions of a convolution filter are typically small, odd, and square. For example, convolution filters are typically or matrices. Odd dimensions are preferred to even dimensions.

Synonyms

- Kernel (convolution)

Related Terms

References

Finite-state transducer (FST)

Related Terms

References

First-order information

First-order information is a term used to mean information obtained by computing the first derivative of a function. The first derivative of a function reveals the slope of a tangent line to the function. This gives a general idea of how the function is changing at that point, but does not give information about the curvature of the function–the second derivative is required for that.

First-order information should not be confused with first-order logic.

Related Terms

Gap statistic

References

Gaussian mixture model (GMM)

Related Terms

References

Generalized additive model (GAM)

References

Generative Adversarial Network (GAN)

A generative adversarial network (GAN) is a framework for training neural networks–often for the purpose of training neural networks to artificially generate novel data samples.

A GAN is structured as a game between two neural networks:

- a discriminator network that distinguishes between real-world data samples and artificially generated imitation data samples.

- a generator network that learns to create artificial data that is realistic enough to fool the discriminator.

A GAN is successfully trained when both of these goals are achieved:

- The generator can reliably generate data that fools the discriminator.

- The generator generates data samples that are as diverse as the distribution of the real-world data.

When the generator achieves both goals #1 and #2, it is theoretically capable of “perfect” artifiical data that no human or discriminative model could distinguish as fake.

However, in practice, there are many challenges to training a GAN to the point where the generator can produce realistic samples. For exmaple, many GANs suffer from mode collapse, where the generator network gets “stuck” generating only a few data samples.

Ian Goodfellow originally proposed GANs in a 2014 paper, originally formulating the GAN loss function as a two-player minimax game between the generator and discriminator. Since then, newer GAN variants have altered the loss function in other ways, such as using the Wasserstein loss function.

Related Terms

- Adversarial autoencoder

- Adversarial Variational Bayes

- Deep Convolutional Generative Adversarial Network (DCGAN)

- Minimax

- Mode collapse

- Neural style transfer

- Temporal Generative Adversarial Network (TGAN)

- Variational Autoencoder (VAE)

- Vector-Quantized Variational Autoencoders (VQ-VAE)

References

- Generative adversarial networks - Wikipedia

(en.wikipedia.org) - Generative adversarial networks

(arxiv.org) - NIPS 2016 tutorial: Generative Adversarial Networks

(arxiv.org)

Gibbs sampling

Related Terms

Global Average Pooling (GAP)

References

GloVe (Global Vectors) embeddings

GloVe, or Global Vectors, refers to a word embedding algorithm from the Stanford NLP group.

Related Terms

References

- GloVe: Global Vectors for Word Representation - Stanford NLP Group

(nlp.stanford.edu) - GloVe - Stanford NLP project page

(nlp.stanford.edu)

GoogLeNet

Related Terms

Gradient Clipping

References

- Gradient Clipping - Tensorflow Python Documentation

(www.tensorflow.org) - On the difficulty of training Recurrent Neural Networks

(arxiv.org)

Gradient descent

Gradient descent is an optimization algorithm designed to find the minimum of a function. Many machine learning algorithms use gradient descent or a variant.

Common variants include: - Stochastic Gradient Descent (SGD) - Minibatch Gradient Descent

Related Terms

Gradient

The gradient is the vector generalization of the derivative.

For a function , the gradient is the vector containing the partial derivatives of with respect to each .

Related Terms

Gram matrix

Wolfram Mathworld defines Gram matrix as:

Given a set of vectors (points in ), the Gram matrix is the matrix of all possible inner products of , i.e.,

References

- Gramian matrix - Wikipedia

(en.wikipedia.org) - Gram Matrix - Wolfram Mathworld

(mathworld.wolfram.com) - Gram matrix - Encyclopedia of Mathematics

(www.encyclopediaofmath.org)

Graph Neural Network

References

Graph

Related Terms

Grid search

Hadamard product

The Hadamard product refers to component-wise multiplication of the same dimension. The symbol is commonly used as the Hadamard product operator.

Here is an example for the Hadamard product for a pair of matrices.

Hamming distance

Harmonic Precision-Recall Mean (F1 Score)

The score is a classification accuracy metric that combines precision and recall. It is designed to be useful metric when classifying between unbalanced classes or other cases when simpler metrics could be misleading.

When classifying between two cases (“positive” and “negative”), there are the four possible results of prediction:

| Actual Positive | Actual Negative | |

| Predicted Positive | True Positives | False Positives |

| Predicted Negative | False Negatives | True Negatives |

Precision answers the question, “What fraction of positive predictions are true predictions?”

A cancer diagnostic test that suggested that all patients have cancer would achieve

Recall answers the question, “Out of all the true positives, what fraction of them did we identify?”

A cancer diagnostic test that suggested that all patients have cancer would achieve perfect recall, as all patients that actually have cancer would be identified.

The F1 score is a way to combine precision and recall in the following way:

For a classifier to have a high score, it needs to have high precision and high recall.

References

He initialization

The term He initialization refers to the first author in the paper “Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification”.

He initialization initializes the bias vectors of a neural network to and the weights to random numbers drawn from a Gaussian distribution where the mean is and the variance is where is the dimension of the previous layer.

References

Helvetica scenario

See Mode collapse.Hessian-free optimization

Related Terms

References

- Hessian Free Optimization - Andrew Gibiansky

(andrew.gibiansky.com) - Deep learning via Hessian-free optimization

(www.cs.toronto.edu) - Hessian-free Optimization for Learning Deep Multidimensional Recurrent Neural Networks

(arxiv.org)

Hessian matrix

Related Terms

Hidden Markov Models (HMMs)

Hierarchical Dirichlet process (HDP)

Related Terms

Hierarchical Latent Dirichlet allocation (hLDA)

Hierarchical Softmax

Related Terms

Hinge loss

From the scikit-learn documentation, we get the following definition:

The hinge_loss function computes the average distance between the model and the data using hinge loss, a one-sided metric that considers only prediction errors. (Hinge loss is used in maximal margin classifiers such as support vector machines.)

Hopfield Network

A Hopfield network (HN) is a type of recurrent neural network(RNN). The HNs have only one layer, with each neuron connected to every other neuron: All neurons act as input and output. The model of the network consists of a set of neurons and corresponding set of unit delays, forming a multiple loop feedback system. The output of a neuron is, via a unit delay element, sent to all of the others neurons in the HN, except itself.

Some important properties of HNs are that all neurons have binary outputs (either 0, 1 or -1, 1), it isn’t allowed a connection from a neuron to itself, the neurons are updated at random order (asynchronously), and the weights between the neurons are symmetric. This last property guarantees that the energy function decreases while following the activation function rules, and HNs are guaranteed to converge to a local minimum.

Hopfield networks are used for pattern recognition, and provides a model for understanding human memory. A HN is initially trained to store a number of patterns, and then it’s able to recognize any of the learned patterns by exposure to only partial or even corrupted information about the pattern (it returns the closest pattern or the best guess). This property makes the Hopfield network a form of associative memory (or content-addressable memory). It’s applications include image detection and recognition, enhancement of X-Ray images, medical images restoration, etc.

Related Terms

References

Hypergraph

A hypergraph is a generalization of the graph. A graph has edges that connect pairs of vertices, but a hypergraph has hyperedges that can connect any number of vertices.

References

- Hypergraph - Wikipedia

(en.wikipedia.org) - Learning with Hypergraphs: Clustering, Classification, Embedding

(papers.nips.cc) - What are the applications of hypergraphs? - Math Overflow

(mathoverflow.net)

Hypernetwork

References

Hypernym

Related Terms

Hyperparameter

Related Terms

Hyponym

Related Terms

Identity mapping

Importance sampling

Imputation

Imputation means replacing missing data values with substitute values. There are several ways to do this such as choosing from a random distribution to avoid bias or by replacing the missing value with the mean or median of that column.

References

- 6 Different Ways to Compensate for Missing Values In a Dataset (Data Imputation with examples) - Will Badr

(towardsdatascience.com) - Imputation (statistics)

(en.wikipedia.org) - Defining, Analysing, and Implementing Imputation Techniques - Shashank Singhal

(scikit-learn.org)

Inception

Inception refers to a particular neural network model in the CVPR 2015 paper titled Going Deeper With Convolutions.

Related Terms

References

- google/inception - Github

(github.com) - Going Deeper With Convolutions

(arxiv.org) - Inceptionism: Going Deeper into Neural Networks

(research.googleblog.com) - How does the Inception module work in GoogLeNet deep architecture?

(www.quora.com) - Inception in Tensorflow - Github

(github.com) - Rethinking the Inception Architecture for Computer Vision

(arxiv.org) - Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning

(arxiv.org)

Inceptionism

Inceptionism refers to a visualization technique to understand what a neural network learned. The network is fed an image, asked what the network detected, and then that feature in the image is amplified. The full technique is described in the Google Research blog post titled Inceptionism: Going Deeper into Neural Networks.

Independent and Identically Distributed (i.i.d)

A collection of random variables is independent and identically distributed if they have these properties:

- they all have the same probability distribution.

- they are all mutually independent of each other.

Indian Buffet Process

References

Internal covariate shift

The term interal covariate shift comes from the paper Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift.

The authors’ precise definition is:

We define Internal Covariate Shift as the change in the distribution of network activations due to the change in network parameters during training.

In neural networks, the output of the first layer feeds into the second layer, the output of the second layer feeds into the third, and so on. When the parameters of a layer change, so does the distribution of inputs to subsequent layers.

These shifts in input distributions can be problematic for neural networks, especially deep neural networks that could have a large number of layers.

Batch normalization is a method intended to mitigate internal covariate shift for neural networks.

References

Inverted dropout

Inverted dropout is a variant of the original dropout technique developed by Hinton et al.

Just like traditional dropout, inverted dropout randomly keeps some weights and sets others to zero. This is known as the “keep probability” .

The one difference is that, during the training of a neural network, inverted dropout scales the activations by the inverse of the keep probability .

This prevents network’s activations from getting too large, and does not require any changes to the network during evaluation.

In contrast, traditional dropout requires scaling to be implemented during the test phase.

References

Jaccard index (intersection over union)

The Jaccard index–otherwise known as intersection over union–is used to calculate the similarity or difference of sample sets.

The index is defined to be 1 if the sets are empty.

Related Terms

Jacobian matrix

Related Terms

k-Max Pooling

Related Terms

References

K-Means clustering

Related Terms

Kernel (convolution)

See Filter (convolution).Kullback-Leibler (KL) divergence

Language segmentation

This phrase is most concisely described in in this work by David Alfter:

Language segmentation consists in finding the boundaries where one language ends and another language begins in a text written in more than one language. This is important for all natural language processing tasks.

[…]

One important point that has to be borne in mind is the difference between language identification and language segmentation. Language identification is concerned with recognizing the language at hand. It is possible to use language identification for language segmentation. Indeed, by identifying the languages in a text, the segmentation is implicitly obtained. Language segmentation on the other hand is only concerned with identifying language boundaries. No claims about the languages involved are made.

Laplacian matrix

Related Terms

Latent Dirichlet Allocation Differential Evolution (LDADE)

LDADE is a tool proposed by Agrawal et al. in a paper titled What is Wrong with Topic Modeling? (and How to Fix it Using Search-based Software Engineering).

It tunes LDA parameters using Differential Evolution to increase the clustering stability of standard LDA.

References

Latent Dirichlet allocation (LDA)

Related Terms

- Dirichlet process

- Hierarchical Dirichlet process (HDP)

- Hierarchical Latent Dirichlet allocation (hLDA)

- Latent Dirichlet Allocation Differential Evolution (LDADE)

- Latent Semantic Indexing (LSI)

- Pitman-Yor Topic Modeling (PYTM)

- Termite

Latent semantic analysis (LSA)

See Latent Semantic Indexing (LSI).Latent Semantic Indexing (LSI)

Synonyms

- Latent semantic analysis (LSA)

Related Terms

References

- Latent semantic indexing - Wikipedia

(en.wikipedia.org) - What is latent semantic indexing? - Search Engine Journal

(www.searchenginejournal.com) - Latent Semantic Indexing - Introduction to Information Retrieval

(nlp.stanford.edu)

Leaky ReLU

Leaky ReLU is a type of activation function that tries to solve the Dying ReLU problem.

A traditional rectified linear unit returns 0 when . The Dying ReLU problem refers to when the unit gets stuck this way–always returning 0 for any input.

Leaky ReLU aims to fix this by returning a small, negative, non-zero value instead of 0, as such:

where is typically a small value like .

Related Terms

Learning rate annealing

See Learning rate decay.Learning rate decay

Synonyms

- Learning rate annealing

Related Terms

References

- Decaying the learning rate - TensorFlow Documentation

(www.tensorflow.org) - Annealing the learning rate - Stanford CS231n

(cs231n.github.io)

Learning rate

Related Terms

Learning To Rank (LTR)

Learning-to-rank is the application of machine learning to ranking search results, recommendations, or similar information.

References

- Learning to rank - Wikipedia

(en.wikipedia.org) - Learning To Rank - Apache Solr Documentation

(cwiki.apache.org)

LeNet

LeNet was an early convolutional neural network proposed by Lecun et al in the paper Gradient-Based Learning Applied to Document Recognition.

LeNet was designed for handwriting recognition. Many modern convolutional neural network architectures are inspired by LeNet.

Related Terms

References

- Case Studies - Stanford CS231n Convolutional Neural Networks

(cs231n.github.io) - Gradient-Based Learning Applied to Document Recognition

(yann.lecun.com)

Lexeme

Likelihood

In statistics, likelihood is the hypothetical probability that a past event would yield a specific outcome. Probability is concerned with the future, but likelihood is concerned with the past.

Linear discriminant analysis (LDA)

Long Short-Term Memory (LSTM)

Long short term memory (LSTM) networks try to reduce the vanishing and exploding gradient problem during the backpropagation in recurrent neural networks. LSTM are in general, a RNN where each neuron has a memory cell and three gates: input, output and forget. The purpose of the memory cell is to retain information previously used by the RNN, or forget if needed. LSTMs are explicitly designed to avoid the long-term dependency problem in RNNs, and have been shown to be able to learn complex sequences better than simple RNNs.

The structure of a memory cell is: an input gate, that determines how much of information from the previous layer gets stored in the cell; the output gate, that determines how of the next layer gets to know about the state of the current cell; and the forget gate, which determines what to forget about the current state of the memory cell.

Related Terms

- Bidirectional LSTM

- Binary Tree LSTM

- Child-Sum Tree-LSTM

- Constituency Tree-LSTM

- Dependency Tree LSTM

- Gradient Clipping

- Multilayer LSTM

- N-ary Tree LSTM

- Peephole connection

- Sequence to Sequence Learning (seq2seq)

- Tree-LSTM

References

Loss function

Related Terms

Magnet loss

Magnet loss is a type of loss function used in distance metric learning machine learning problems. It was introduced in the paper Metric Learning with Adaptive Density Discrimination. The authors of the paper introduced magnet loss as an improvement over triplet loss and other loss functions designed to learn a distance metric.

Instead of working on individuals, pairs, or triplets of data points, magnet loss operates on entire regions of the embedding space that the data points inhabit. Magnet loss models the distributions of different classes in the embedding space and works to reduce the overlap between distributions.

References

- Metric Learning with Adaptive Density Discrimination

(arxiv.org) - Significance of Softmax-based Features in Comparison to Distance Metric Learning-based Features

(arxiv.org)

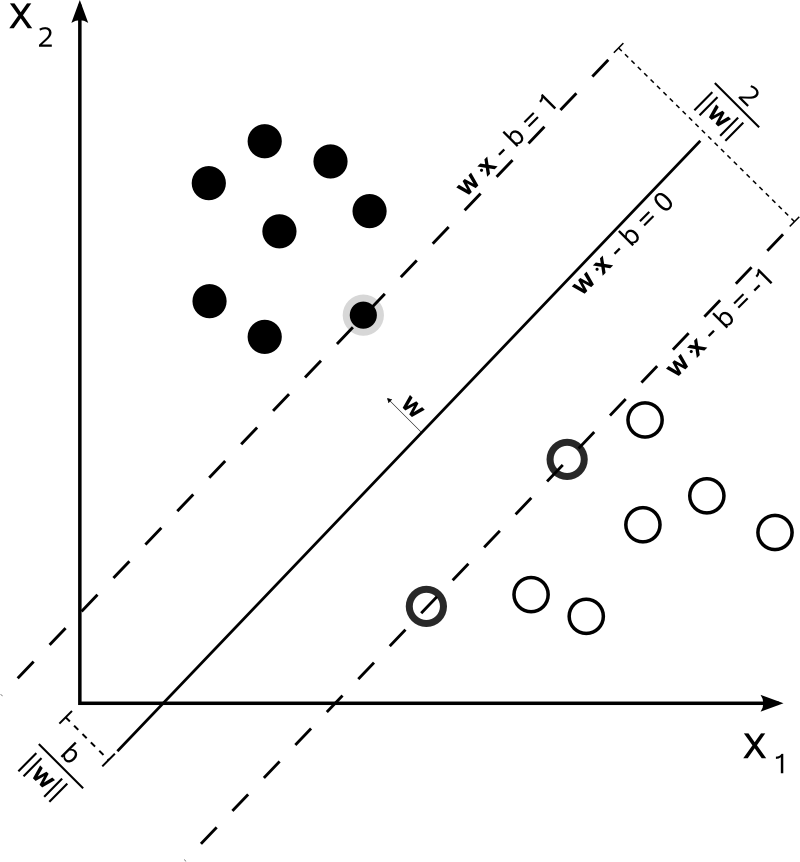

Margin

In machine learning, a margin often refers to the distance between the two hyperplanes that separate linearly-separable classes of data points.

The term is most commonly used when discussing support vector machines, but often appears in other literature discussing boundaries between points in a vector space.

References

Market basket analysis

Markov Chain Monte Carlo (MCMC)

Related Terms

Max-margin loss

Max Pooling

Related Terms

Maximum A Posteriori (MAP) Estimation

Related Terms

Maximum Likelihood Estimation (MLE)

Related Terms

Maxout

References

Mean Reciprocal Rank (MRR)

Mean Reciprocal Rank is a measure to evaluate systems that return a ranked list of answers to queries.

For a single query, the reciprocal rank is where is the position of the highest-ranked answer ( for answers returned in a query). If no correct answer was returned in the query, then the reciprocal rank is 0.

For multiple queries , the Mean Reciprocal Rank is the mean of the reciprocal ranks.

References

- Chapter 14: Question Answering and Information Retrieval - Speech and Language Processing

(web.stanford.edu) - Mean reciprocal rank - Wikipedia

(en.wikipedia.org)

Mention-pair coreference model

References

Mention-ranking coreference model

Related Terms

References

Meronym

Meta learning

References

METEOR

METEOR is an automatic evaluation metric for machine translation, designed to mitigate perceived weaknesses in BLEU. METEOR scores machine translation hypotheses by aligning them to reference translations, much like BLEU does.

References

Minibatch Gradient Descent

Synonyms

- Mini-Batching

Mini-Batching

Minimal matching distance

Minimax

Minimax is a way of modeling the possible scores in an -player games. Minimax is commonly used in computer science and game theory to model outcomes from interactions between different players.

Computer programs that play games (such as chess) will typically build a tree of possible future moves from all of the players in the game. Such programs will use the minimax rule to model potential outcomes of the game, modeling each turn of the game with the following assumptions: - all other players make the most-advantageous move modeled in the game tree. - the computer program makes the least-advantageous move in the game tree.

Note that the term “minimax” assumes that the computer program’s goal is to minimize the total score. If game players win by maximizing a score, the equivalent term is maximin.

Generative adversarial networks (GANs) were originally proposed as a minimax zero-sum two-player game between a generative model and a discriminative model.

References

Minimum description length (MDL) principle

References

Mixed-membership model

Mode collapse

Mode collapse, also known as catastrophic collapse or the Helvetica scenario, is a common problem when training generative adversarial networks (GANs).

A GAN is a framework for creatively and artificially generating novel data samples, structured as a zero-sum (minimax) game between two neural networks:

- a discriminator network that distinguishes between real-world data samples and artificially generated imitation data samples.

- a generator network that learns to create artificial data that is realistic enough to fool the discriminator.

A GAN is successfully trained when both of these goals are achieved:

- The generator can reliably generate data that fools the discriminator.

- The generator generates data samples that are as diverse as the distribution of the real-world data.

Mode collapse happens when the generator fails to achieve Goal #2–and all of the generated samples are very similar or even identical.

The generator may “win” by creating one realistic data sample that always fools the discriminator–achieving Goal #1 by sacrificing Goal #2.

Partial mode collapse happens when the generator produces realistic and diverse samples, but obviously much less diverse than the real-world data distribution. For example, when training a GAN to generate human faces, the generator might succeed in producing a diverse set of male faces but fail to generate any female faces.

Solutions to mode collapase

- Wasserstein loss. Formulates the GAN loss functions to more directly represent minimizing the distance between two probability distributions. The Wasserstein loss is designed to fix an issues caused by the original GAN loss functions being designed as a zero-sum (minimax) game. Problems like mode collapse happen because the generator winning a turn in the game does not correlate with actually reducing the distances between the generated and real-world probability distributions.

- Unrolling. Updating the generator’s loss function to backpropagate through steps of gradient updates for the discriminator. This lets the generator “see” steps into the “future”–which hopefully encourages the generator to produce more diverse and realistic samples.

- Packing. Modifying the discriminator to make decisions based on multiple samples all of the same class–either real or artificial. When the discriminator looks at a pack of samples at once, it has a better chance of identifying an un-diverse pack as artificial.

Synonyms

- Helvetica scenario

Related Terms

Model averaging

Related Terms

Model compression

Model parallelism

Model parallelism is where multiple computing nodes evaluate the same model with the same data, but using different parameters or hyperparameters.

In contrast to model parallelism, data parallelism where the different computing nodes have the same parameters but different data.

References

- What is the difference between model parallelism and data parallelism? - Quora

(www.quora.com) - Training with Multiple GPUs Using Model Parallelism - MXNet documentation

(mxnet.io)

Momentum

Momentum is commonly understood as a variation of stochastic gradient descent, but with one important difference: stochastic gradient descent can unnecessarily oscillate, and doesn’t accelerate based on the shape of the curve.

In contrast, momentum can dampen oscillations and accelerate convergence.

Momentum was originally proposed in 1964 by Boris T. Polyak.

Related Terms

References

Moore-Penrose Pseudoinverse

Multi-Armed Bandit

Related Terms

Multidimensional recurrent neural network (MDRNN)

References

- Hessian-free Optimization for Learning Deep Multidimensional Recurrent Neural Networks

(arxiv.org) - Multi-Dimensional Recurrent Neural Networks

(arxiv.org)

Multilayer LSTM

Multinomial distribution

Related Terms

Multinomial mixture model

A multinomial mixture model is a mixture of multinomial distributions.

The Wikipedia page for the multinomial distribution notes the following regarding the relationship between the multinomial distribution and the categorical distribution:

Note that, in some fields, such as natural language processing, the categorical and multinomial distributions are conflated, and it is common to speak of a “multinomial distribution” when a categorical distribution is actually meant. This stems from the fact that it is sometimes convenient to express the outcome of a categorical distribution as a “1-of-” vector (a vector with one element containing a 1 and all other elements containing a 0) rather than as an integer in the range ; in this form, a categorical distribution is equivalent to a multinomial distribution over a single trial.

This is important to remember when reading about categorical mixture models versus multinomial mixture models.

Related Terms

References

- Multinomial distribution - Wikipedia

(en.wikipedia.org) - Mixture model - Wikipedia

(en.wikipedia.org)

Multiple crops at test time

Multi-crop at test time is a form of data augmentation that a model uses during test time, as opposed to most data augmentation techniques that run during training time.

Broadly, the technique involves:

- cropping a test image in multiple ways

- using the model to classify these cropped variants of the test image

- averaging the results of the model’s many predictions

Multi-crop at test time is a technique that some machine learning researchers use to improve accuracy at test time. The technique found popularity among some competitors in the ImageNet Large Scale Visual Recognition Competition after the famous AlexNet paper, titled ImageNet Classification with Deep Convolutional Neural Networks, used the technique.

References

Mutual information

References

N-ary Tree LSTM

References

Named Entity Recognition in Query (NERQ)

Named Entity Recognition in Query is a phrase used in a research paper and patent from Microsoft, referring to the Named Entity Recognition problem in web search queries.

References

- Named Entity Recognition in Query

(soumen.cse.iitb.ac.in) - Named entity recognition in query - Google Patents

(www.google.com)

Named Entity Recognition (NER)

Related Terms

References

Narrow convolution

Related Terms

Natural Language Processing

Related Terms

- Chunking

- Coreference resolution

- Embed, Encode, Attend, Predict

- Entailment

- Language segmentation

- Named Entity Recognition (NER)

- Part-of-Speech (POS) Tagging

- Recurrent Neural Network Language Model (RNNLM)

- SyntaxNet

NCHW

NCHW is an acronym describing the order of the axes in a tensor containing image data samples.

- N: Number of data samples.

- C: Image channels. A red-green-blue (RGB) image will have 3 channels.

- H: Image height.

- W: Image width.

NCHW is sometimes referred to as a channels-first layout.

Related Terms

Negative Log Likelihood

Related Terms

References

- Why Minimize Negative Log Likelihood? - Quantivity

(quantivity.wordpress.com) - I am wondering why we use negative (log) likelihood sometimes? - Cross Validated

(stats.stackexchange.com)

Negative Sampling

Nested Chinese Restaurant Process

Related Terms

Neural checklist model

Neural checklist models were introduced in the paper Globally Coherent Text Generation with Neural Checklist Models by Kiddon et al.

A neural checklist model is a recurrent neural network that tracks an agenda of text strings that should be mentioned in the output.

This technique allows the neural checklist model to generate globally coherent text, as opposed to text from traditional RNNs that is only locally coherent.

The original paper describes applying the neural checklist model to recipes and dialogue responses for information systems, where there already exists a pre-existing notion of all the topics that should be present in a natural language response.

References

Neural network

Related Terms

- Activation function

- Connectionism

- Convolutional Neural Networks (CNN)

- Hopfield Network

- Internal covariate shift

- Neural Turing Machine (NTM)

- Rectified Linear Unit (ReLU)

- Recurrent Neural Network Language Model (RNNLM)

- Recurrent Neural Network

- Recursive Neural Network

- Siamese neural network

- Time-delayed neural network

- Weight sharing

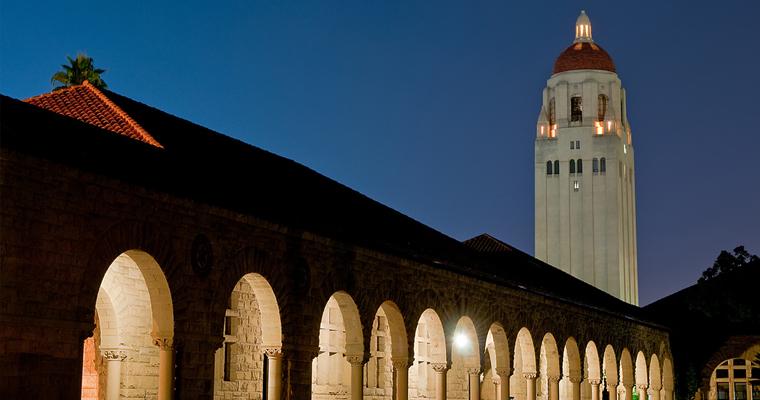

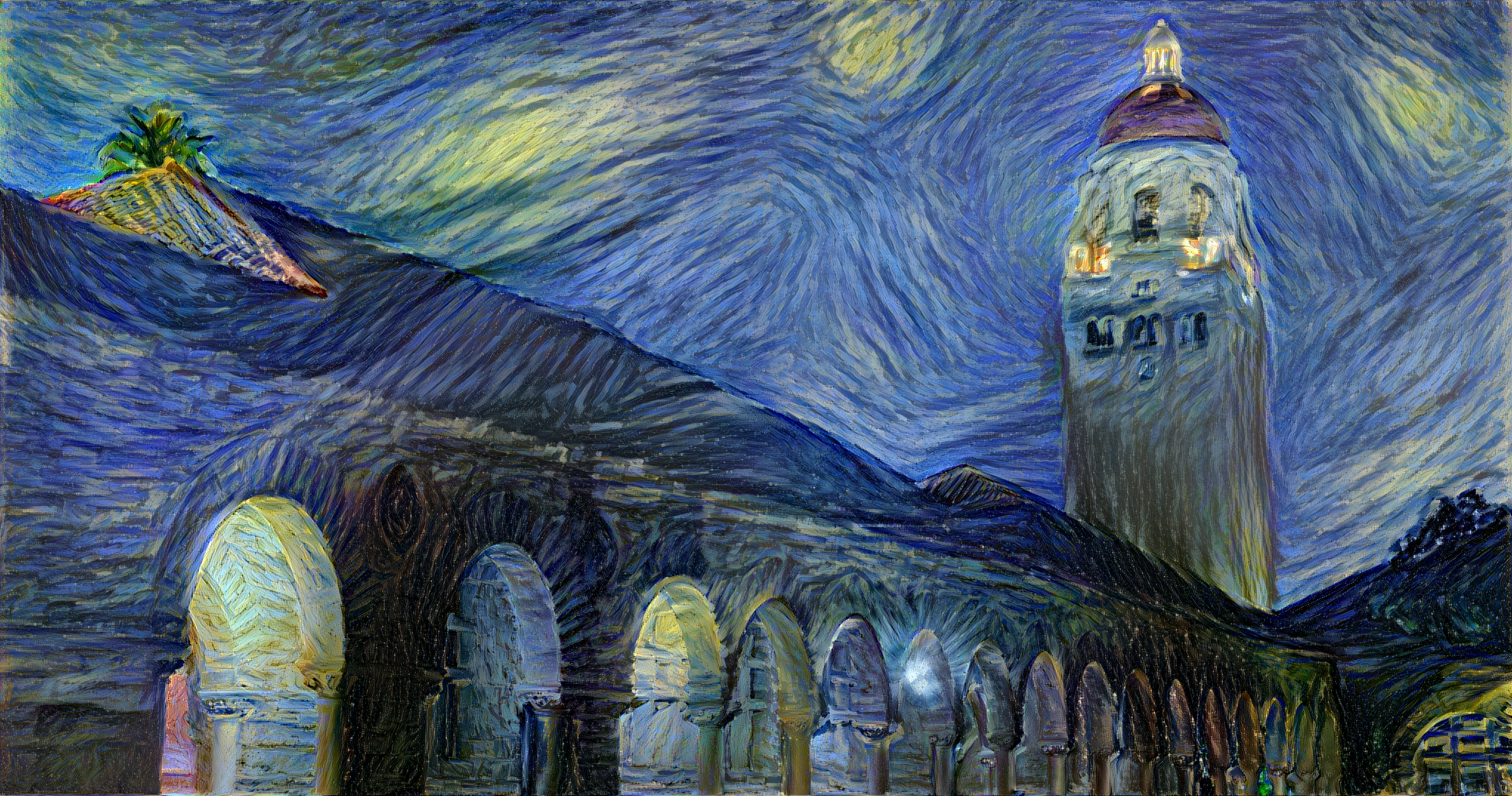

Neural style transfer

Neural style transfer refers to the use of neural networks to apply the style of a style image to a content image.

(Most of the literature in neural style transfer refers to images, but recent research has explored the use of neural style transfer techniques to other domains.)

Examples

The below examples of neural style transfer are from Justin Johnson of Stanford University. The top left image is Vincent van Gogh’s The Starry Night and the top right image is a photograph of Stanford’s Hoover tower. The bottom image is generated by Justin Johnson using neural style transfer.

Top Left: Vincent Van Gogh’s famous artwork titled The Starry Night

Top Right: A photograph of Hoover Tower on the Stanford University campus

Bottom: A synthetic image generated by Justin Johnson that depicts Stanford University’s Hoover Tower using the style of Vincent van Gogh’s The Starry Night

History

In the 1990s, some computer science researchers began exploring non-photorealistic rendering, which offered ways to generate images inspired by the style and texture of specific artwork (such as oil paintings). However, these techniques were generally limited in the types of styles they could generate target images for.

This limitation began to disappear in 2015 with the publication of the paper A Neural Algorithm of Artistic Style. The paper’s authors demonstrated the use of convolutional neural networks to generate images inspired by many different styles.

Loss Function

Broadly, neural style transfer is the technique of using gradient descent to minimize the loss function over the following variables:

- – our input content image. In the above example, this would be the photograph of Stanford University’s Hoover Tower.

- – our style image. In the above example, this would be van Gogh’s The Starry Night.

- – our generated image. In the above example, this is the generated image of Hoover Tower in the style of The Starry Night.

We intend to find a value of that minimizes where:

- is a function that compares the difference of content between and .

- is a function that compares the difference of style between and .

- and are weighting factors to balance whether the generated image favors accurate content or accurate style.

References

- neural_style: Torch implementation of neural style algorithm -- Justin Johnson

(github.com) - A Neural Algorithm of Artistic Style

(arxiv.org) - Neural Style Transfer: A Review

(arxiv.org) - Non-photorealistic rendering - Wikipedia

(en.wikipedia.org)

Neural Turing Machine (NTM)

Neural Turing Machines (NTM) consists of a RNN (commonly with LSTM), and a memory bank, where the neural network can make write and read operations. By making each operation of the NTM differentiable, it can be trained efficiently trained with gradient descent.

The main idea of the NTM is to use the memory bank – a large, addressable memory – to give a memory to the RNN so that it can read and write to, yielding a practical mechanism to learn programs. The NTM has been shown to be able to infer simple algorithms, such as copying, sorting and associative recall from input and output examples.

References

NHWC

NHWC is an acronym describing the order of the axes in a tensor containing image data samples.

Software frameworks for training machine learning models–such as TensorFlow–use the acronym NHWC

- N: Number of data samples.

- H: Image height.

- W: Image width.

- C: Image channels. A red-green-blue (RGB) image will have 3 channels.

NHWC is sometimes referred to as a channels-last layout.

Related Terms

No Free Lunch (NFL) theorem

The “No Free Lunch” theorem is the idea that all optimizers perform equally well when averaged across all possible optimization problems.

References

- Doesn't the NFL theorem show that black box optimization is flawed? - Black Box Optimization Competition

(bbcomp.ini.rub.de) - No free lunch theorem - Wikipedia